Storing Sitecore Logs Using RabbitMQ, Logstash, Elasticsearch and Kibana (ELK)

In an earlier post I showed how to store Sitecore log files in MongoDB (see it here). Now I will demonstrate how to do the same thing using RabbitMQ, Logstash and Elasticsearch, after which you can view and search the logs using Kibana. Any advanced installation of the stack is out of scope for this post. Also, the entire stack will be set up on localhost; if it is moved to a server, additional configuration may be required.

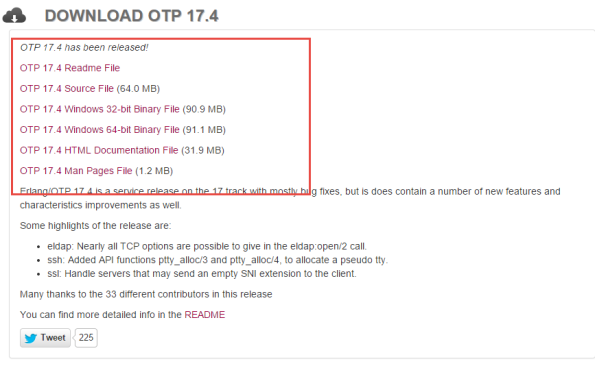

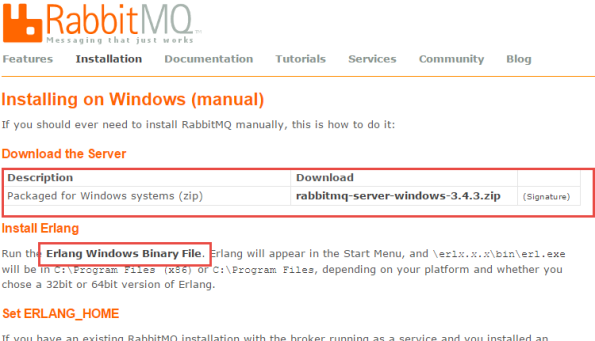

So let’s jump right in and get RabbitMQ installed. Go to the page below and download RabbitMQ and Erlang. See the marked download links in the image below.

https://www.rabbitmq.com/install-windows-manual.html

Erlang download

Start by running the Erlang installer; the installation should be next … next and done. Then install RabbitMQ, again next … next and done.

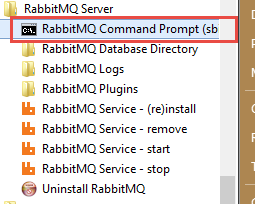

Now we need to install an add-on to RabbitMQ. This is most easily done by going through the Windows start menu.

In the command prompt, paste in the following and hit enter:

    rabbitmq-plugins enable rabbitmq_management

Now you can navigate to http://localhost:15672/ in your browser. The default username and password are guest and guest.

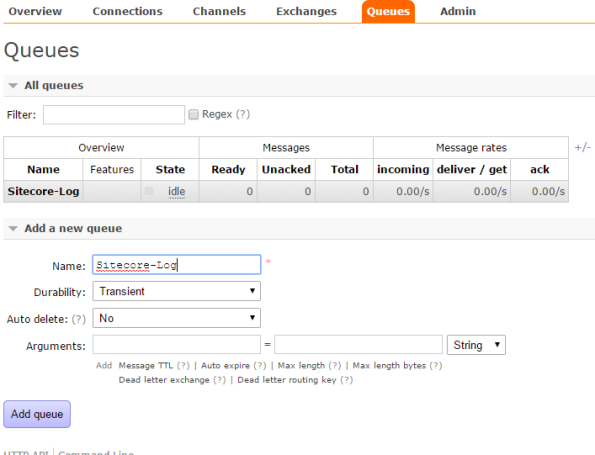

Go to the Queues tab and create a new queue; call it Sitecore-Log and make it Transient.

Perfect. Now with that in place, let’s get some code to push log entries to our newly created queue.

First, let’s create our log appender:

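    using System.Text;
    using log4net.Appender;
    using log4net.spi; // assumed namespace of LoggingEvent in the log4net bundled with Sitecore; stock log4net uses log4net.Core
    using Newtonsoft.Json;

    // log4net appender that serializes each log event to JSON and publishes it to RabbitMQ.
    public class LogAppender : AppenderSkeleton
    {
        // Populated by log4net from the child elements of <appender> in web.config.
        public virtual string Host { get; set; }
        public virtual string UserName { get; set; }
        public virtual string Password { get; set; }
        public virtual string ExchangeName { get; set; }
        public virtual string QueueName { get; set; }

        protected override void Append(LoggingEvent loggingEvent)
        {
            var message = LogMessageFactory.Create(loggingEvent);
            var messageBuffer = SerializeMessage(message);
            PushMessageToQueue(messageBuffer);
        }

        private byte[] SerializeMessage(LogMessage message)
        {
            var serializedMessage = JsonConvert.SerializeObject(message);
            // UTF-8 rather than Encoding.Default (the machine's ANSI code page),
            // so non-ASCII characters survive the trip to Logstash.
            return Encoding.UTF8.GetBytes(serializedMessage);
        }

        private void PushMessageToQueue(byte[] messageBuffer)
        {
            // With an empty exchange name (the default exchange), the routing key is the queue name.
            RabbitQueue.GetInstance(Host, UserName, Password).Push(ExchangeName, QueueName, messageBuffer);
        }
    }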

I’ve created a simple LogMessage with the additional parameters that I want to log, like the machine name, the application pool name and the site name. So here is a factory that can create a LogMessage from the LoggingEvent:

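    using System;
    using System.Web.Hosting;
    using log4net.spi; // assumed for Sitecore's bundled log4net; stock log4net uses log4net.Core

    // Builds the LogMessage that is sent to the queue from a log4net LoggingEvent.
    public class LogMessageFactory
    {
        public static LogMessage Create(LoggingEvent loggingEvent)
        {
            loggingEvent.GetLoggingEventData();
            var message = new LogMessage()
            {
                // Note: HostingEnvironment.SiteName is the IIS site name.
                ApplicationPoolName = HostingEnvironment.SiteName,
                InstanceName = Environment.MachineName,
                Level = loggingEvent.Level.Name,
                Message = loggingEvent.RenderedMessage,
                Site = GetSiteName(),
                Exception = GetException(loggingEvent),
                TimeStamp = loggingEvent.TimeStamp
            };
            return message;
        }

        private static string GetSiteName()
        {
            // Sitecore.Context.Site can be null, so fall back to an empty string.
            return Sitecore.Context.Site != null ? Sitecore.Context.Site.Name : string.Empty;
        }

        private static string GetException(LoggingEvent loggingEvent)
        {
            // Returns an empty string when the event carries no exception.
            var exceptionString = loggingEvent.GetLoggingEventData().ExceptionString;
            return string.IsNullOrEmpty(exceptionString) ? string.Empty : exceptionString;
        }
    }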

    using System;

    // The object that is serialized to JSON and pushed to the queue.
    public class LogMessage
    {
        public string Level { get; set; }
        public string Message { get; set; }
        public string Source { get; set; } // not populated by LogMessageFactory
        public DateTime TimeStamp { get; set; }
        public string ApplicationPoolName { get; set; }
        public string InstanceName { get; set; }
        public string Site { get; set; }
        public string Exception { get; set; }
    }
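
To make the payload concrete, here is roughly what one serialized LogMessage looks like when it lands on the queue. This is only an illustration: every value is invented, the output is pretty-printed for readability, and the property order and ISO 8601 timestamp assume Json.NET's default settings. Source is null because the factory never sets it.

```json
{
  "Level": "ERROR",
  "Message": "Example message (hypothetical)",
  "Source": null,
  "TimeStamp": "2015-01-05T10:15:30.123+01:00",
  "ApplicationPoolName": "ExampleSite",
  "InstanceName": "EXAMPLE-MACHINE",
  "Site": "website",
  "Exception": ""
}
```

These property names are what show up as fields in Elasticsearch once Logstash has decoded the message as JSON.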

Next is the communication with the queue. This is done through a singleton pattern, but use whatever implementation fits your purpose best.

    using System;
    using RabbitMQ.Client;

    // Singleton (created with double-checked locking) that holds one shared channel to RabbitMQ.
    public sealed class RabbitQueue
    {
        private static volatile RabbitQueue instance;
        private static object syncRoot = new Object();
        private static IModel _queue;
        private static IBasicProperties _queueProperties;

        public static string Host { get; set; }
        public static string UserName { get; set; }
        public static string Password { get; set; }

        private RabbitQueue(string host, string userName, string password)
        {
            Host = host;
            UserName = userName;
            Password = password;
            IntializeConnectionToQueue();
        }

        public static void IntializeConnectionToQueue()
        {
            var connectionFactory = new ConnectionFactory() { HostName = Host, UserName = UserName, Password = Password };
            var connection = connectionFactory.CreateConnection();
            _queue = connection.CreateModel();
            _queueProperties = _queue.CreateBasicProperties();
            // Non-persistent messages: they are lost if the broker restarts, which matches the transient queue.
            _queueProperties.SetPersistent(false);
        }

        // The connection settings are only used the first time the instance is created.
        public static RabbitQueue GetInstance(string host, string userName, string password)
        {
            if (instance == null)
            {
                lock (syncRoot)
                {
                    if (instance == null)
                        instance = new RabbitQueue(host, userName, password);
                }
            }
            return instance;
        }

        public void Push(string exchangeName, string queueName, byte[] messageBuffer)
        {
            // queueName is passed as the routing key.
            _queue.BasicPublish(exchangeName, queueName, _queueProperties, messageBuffer);
        }
    }

Now let’s add the new appender to web.config; use something like this:

    <appender name="RabbitMqAppender" type="RabbitMQLog4NetAppender.LogAppender, RabbitMQLog4NetAppender">
      <host value="localhost" />
      <username value="guest" />
      <password value="guest" />
      <exchangeName value="" />
      <queueName value="Sitecore-Log" />
      <layout type="log4net.Layout.PatternLayout">
        <conversionPattern value="%4t %d{ABSOLUTE} %-5p %m%n %X{machine} " />
      </layout>
      <encoding value="utf-8" />
    </appender>

Let’s add our new log appender alongside the default file appender, so we still have log files written to disk. This means that we can set the queue to be transient rather than persistent.

    <root>
      <priority value="INFO" />
      <appender-ref ref="LogFileAppender" />
      <appender-ref ref="RabbitMqAppender" />
    </root>

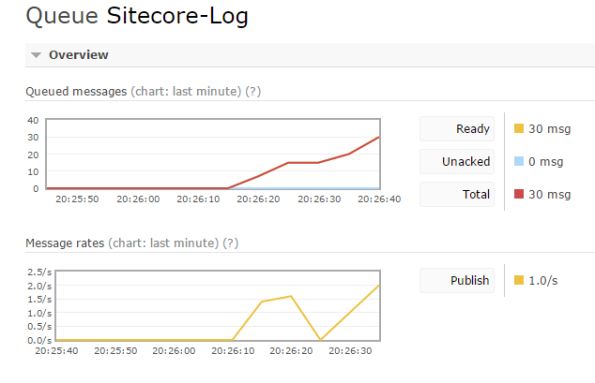

Now you can start up Sitecore, and you should see messages starting to build up in the queue.

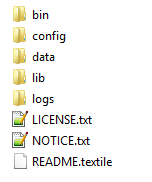

Wuhuuuu, now let’s start putting the messages into Elasticsearch via Logstash. Go to http://www.elasticsearch.org/overview/elkdownloads/ and download Elasticsearch, Kibana and Logstash.

Let’s start with setting up Elasticsearch. After you have extracted the zip file you should see a file structure like the one below. Before you start the server, edit the file elasticsearch.yml in the config directory and add the line “http.cors.enabled: true” at the end of the file. Now go to the bin directory and launch elasticsearch.bat.

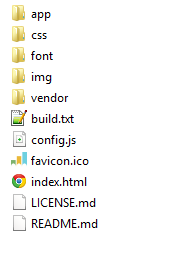
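
For reference, the edit to elasticsearch.yml is a single line appended to the file. The commented-out second setting is an assumption and was not part of the setup described here: some Elasticsearch versions also want an explicit allowed origin before a browser-based client such as Kibana can connect, so check the documentation for your version. The URL shown is a placeholder.

```yaml
# config/elasticsearch.yml, appended at the end of the file
http.cors.enabled: true

# Assumption: may be required on some Elasticsearch versions.
# Replace the placeholder with the URL of your Kibana site.
# http.cors.allow-origin: "http://localhost"
```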

Next we need Kibana. Make a new site in IIS, setting the root to the Kibana folder; it should look something like this.

Now we are almost done. The last part is Logstash, so in the directory where you have extracted Logstash, go into the bin folder.

Create a new file, call it test.conf, and put in the following content:

    input {
      rabbitmq {
        host => "localhost"
        queue => "Sitecore-Log"
      }
    }

    output {
      elasticsearch {
        host => "localhost"
      }
    }
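
A slightly more explicit variant of the same pipeline, offered as a sketch rather than a tested configuration: it spells out the JSON codec, uses the TimeStamp field from the message as the event time, and echoes events to the console while debugging. The date filter and the stdout output are additions that the setup in this post did not use, and the ISO8601 pattern assumes the timestamp was serialized in Json.NET's default format.

```conf
input {
  rabbitmq {
    host => "localhost"
    queue => "Sitecore-Log"
    # Decode the message body as JSON so each LogMessage property becomes a field.
    codec => "json"
  }
}

filter {
  # Use the time the entry was logged rather than the time Logstash read it.
  date {
    match => ["TimeStamp", "ISO8601"]
  }
}

output {
  elasticsearch {
    host => "localhost"
  }
  # Print each event to the console; remove once everything works.
  stdout {
    codec => rubydebug
  }
}
```

The console output is a quick way to check that the fields arrive as expected before looking for them in Kibana.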

Start up a cmd prompt, go to the bin folder of Logstash and run the following command:

    logstash-1.4.2\bin>logstash.bat agent -f test.conf

Now we are done. Let’s go into our Kibana website and have a look. Kibana comes with a default view for Logstash; see below.

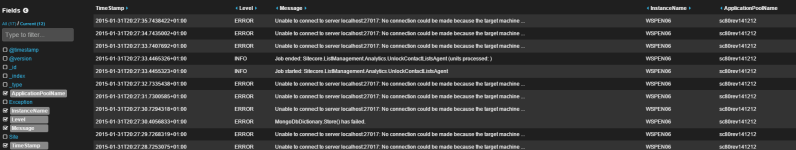

With that in place we can start seeing our log entries.

Choose what is shown by selecting fields on the left side.

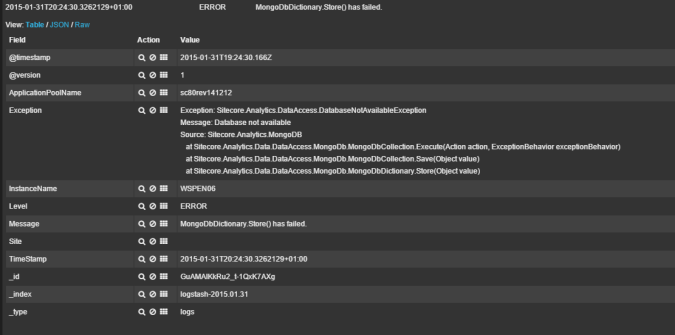

You can see details about an individual message by clicking on it; see the example below.

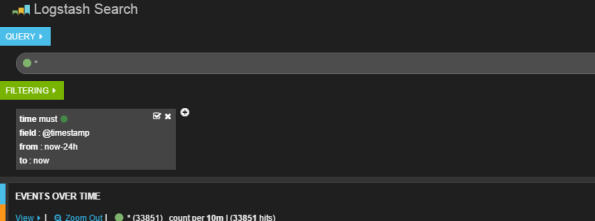

At the top you can add your own filtering and search through the log entries.
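
A few example queries for the search box, assuming the JSON messages were decoded so that the LogMessage properties (Level, Site, Message and so on) are available as fields. The query box accepts Lucene query syntax; the site name and the phrase below are placeholders.

```text
Level:ERROR
Level:(ERROR OR WARN)
Level:ERROR AND Site:website
Message:"Object reference"
```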

That was the first post in 2015, and the first post for Sitecore 8.

Alexander Smagin has also done something like this; see his blog here.

This is not working for me. I have a Sitecore 8.2 solution.

Error:

Unable to cast object of type ‘.RabbitMqAppender’ to type ‘log4net.Appender.IAppender’

Probably because your derived class doesn’t derive from the mentioned log4net appender, i.e. your logger.